Context Preparation vs. Data Preparation: Key Differences, Components & Implementation in 2026

Why does context preparation matter?

For decades, data preparation dominated the machine learning workflow because:

- ML models needed clean, structured training datasets

- Technical accuracy was the primary goal

- Focus was on removing errors, standardizing formats, handling missing values, etc.

What has changed with generative AI?

Large language models (LLMs) understand language but not business meaning. When a sales manager asks an AI analyst “how many customers do we have,” the model needs more than clean data: it needs to know which definition of “customer” the business uses, for example whether trial or churned accounts count.

In the generative AI era, models also need business context such as:

- Business rules that govern data interpretation

- Organizational knowledge that humans intuitively possess

- Semantic understanding of domain-specific terminology

Recent research from Anthropic showed that enterprises failing to gather and operationalize contextual data struggle to deploy sophisticated AI systems.

Industry estimates suggest that as many as 80% of AI projects fail, and a frequently cited reason is that AI lacks the organizational knowledge humans take for granted. Modern data catalogs such as Atlan address this with a context layer that enriches technical metadata with business definitions, relationships, and governance rules.

Rather than just cleaning data, teams now prepare context that teaches AI what data means and how it should be used.

How did context engineering emerge as the defining AI skill in 2026?

The term “context engineering” exploded in mid-2025, popularized by AI leaders like Andrej Karpathy and Shopify CEO Tobi Lütke.

“I really like the term ‘context engineering’ over prompt engineering. It describes the core skill better: the art of providing all the context for the task to be plausibly solvable by the LLM.” - Tobi Lütke

What drove the shift from prompt engineering to context engineering? 3 major catalysts.

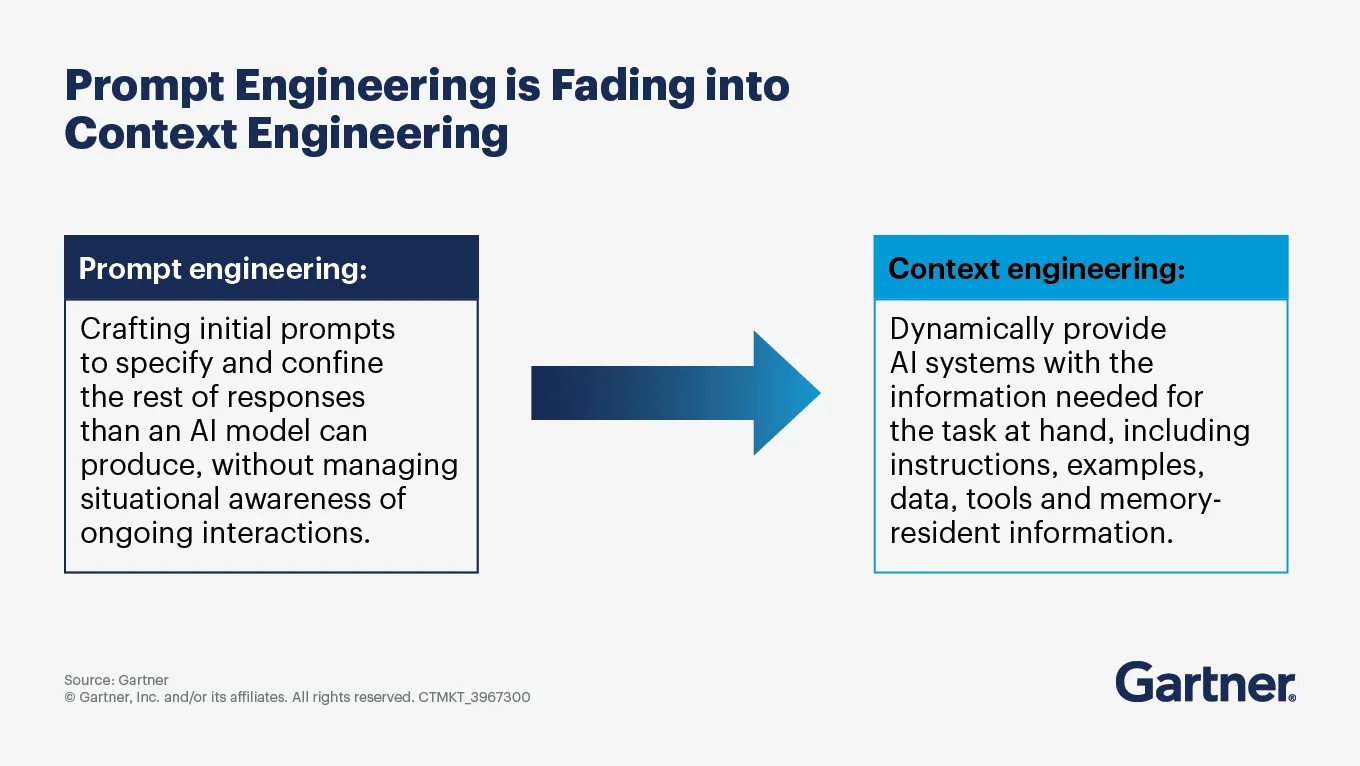

In July 2025, Gartner declared “context engineering is in, and prompt engineering is out,” urging AI leaders to build context-aware architectures. Three major catalysts were instrumental in driving this shift.

1. Prompt engineering couldn’t scale to production

Prompt engineering—the practice of crafting input instructions to improve large language model outputs—worked well for early demos and prototypes but showed clear limitations when teams attempted to scale AI systems into production.

Prompts alone cannot supply the persistent state, memory, retrieval, and system context that real-world applications require. This gap has driven a shift toward context engineering, where prompts become just one component within broader, context-aware architectures.

A 2025 Live Mint explainer captures this transition clearly:

“As AI agents evolve, prompts will no longer be crafted manually for each task but generated dynamically through context pipelines. Prompts remain crucial building blocks but are embedded within larger contexts of retrieval, memory, workflows, and safety layers.”

Gartner reinforces this view, noting that enterprises must design context-aware architectures rather than rely on prompt text alone to achieve production-grade AI systems.

Why prompt engineering is being overshadowed by context engineering. Source: Gartner.

2. RAG’s limitations exposed at scale

Retrieval-Augmented Generation (RAG) initially seemed perfect: point your model at documents and let it retrieve what it needs. In practice, many early RAG implementations struggled once deployed at scale, due to a lack of context governance.

“RAG works adequately for one-off questions over static documents. But the architecture breaks down when AI agents need to operate across multiple sessions, maintain context over time, or distinguish what they’ve observed from what they believe.” - A 2025 VentureBeat article on why RAG is failing

RAG fails at scale because organizations treat it as an LLM feature rather than a platform-level discipline.

Its effectiveness depends heavily on the quality, structure, and governance of the retrieval layer and surrounding system design. When retrievers surface irrelevant, incomplete, or outdated information, models generate confidently incorrect answers. This is largely due to a lack of mechanisms to govern context, track history, or enforce relevance and validity.

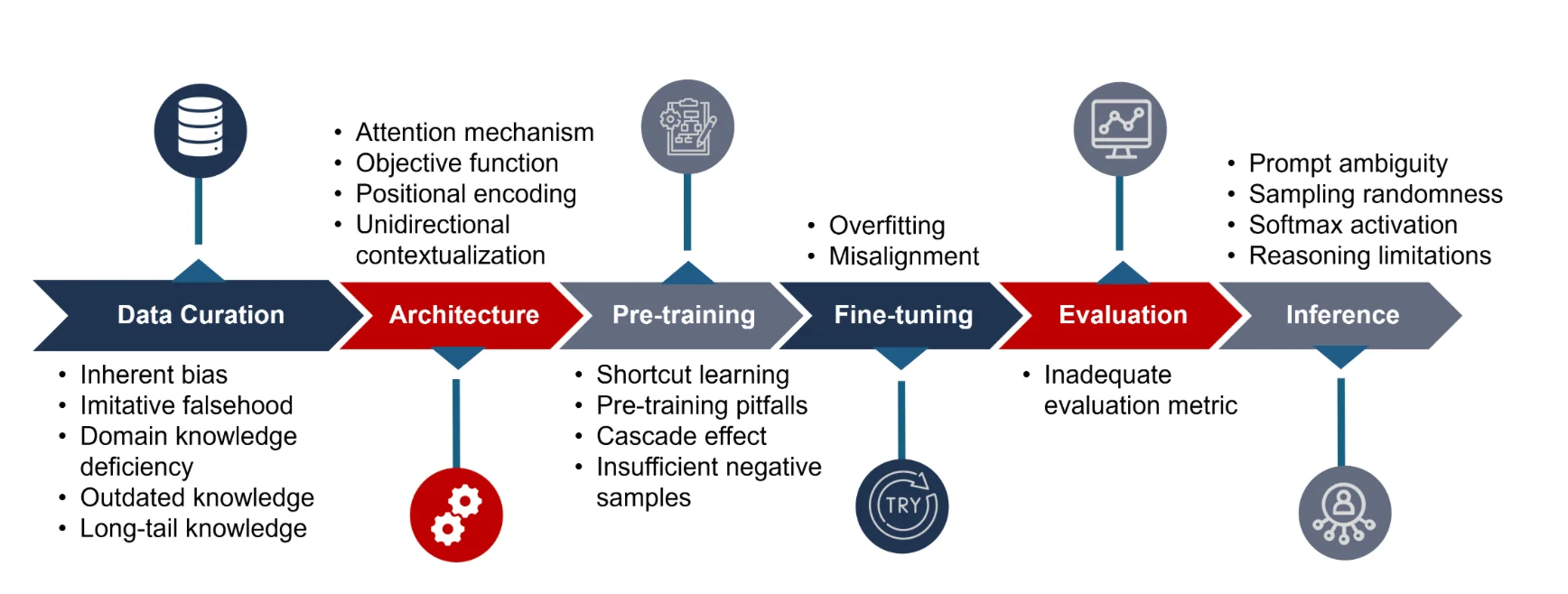

3. The hallucination epidemic

LLMs frequently generate plausible but factually incorrect information, a behavior called hallucination. Some studies of specific domains and tasks have reported hallucination rates between 50% and 82%, although rates vary widely by model, task, and benchmark.

The main causes of hallucination throughout an LLM’s development lifecycle. Source: arXiv.

Hallucinations undermine trust and reliability in AI model outputs, making it difficult to scale AI solutions as stakeholders hesitate to adopt systems that confidently assert incorrect facts.

This realization reframed the problem: reducing hallucinations required better context preparation, not just better prompts or larger models. Gartner explicitly connects hallucination reduction to context engineering, noting that “strategically managing context for each step of an agentic workflow can help ensure that AI agents are accurate, efficient, and capable of handling complex, real-world tasks”.

Gartner defines context engineering as designing and structuring the relevant data, workflows and environment so AI systems can understand intent, make better decisions and deliver contextual, enterprise-aligned outcomes.

In practice, this means:

- Architecting complete information environments, not isolated prompts

- Using active metadata platforms to continuously capture and supply context

- Enriching raw data with business meaning and governance signals

- Delivering the right context at the right moment in the AI workflow

Organizations such as Workday have reported 5x accuracy improvements with structured context layers, suggesting that hallucinations are not inevitable but often a consequence of insufficient context preparation.

What are the core components of context preparation workflows?

Building effective context preparation requires four foundational components.

1. Context extraction

Extraction mines unwritten rules from existing behavior rather than relying on manual documentation. This includes:

- Communication patterns from Slack messages and email

- Decision histories from approval workflows

- Usage data showing actual behavior

- Business rules inferred from consistent patterns

Example: If sales teams consistently discount products by 15% for enterprise customers, that pattern becomes codified context rather than tribal knowledge.
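To make extraction concrete, here is a minimal Python sketch of the discount example above. It assumes approval records are already available as (customer segment, discount) pairs; the record format, the `infer_discount_rules` helper, and its support thresholds are hypothetical, not part of any particular platform.

```python
from collections import Counter, defaultdict

# Hypothetical approval records mined from a deal-approval workflow:
# (customer_segment, discount_percent)
approvals = [
    ("enterprise", 15), ("enterprise", 15), ("enterprise", 15),
    ("enterprise", 10), ("smb", 5), ("smb", 0), ("smb", 5),
]

def infer_discount_rules(records, min_support=0.7, min_count=3):
    """Codify a segment's most common discount as a rule when it covers at least
    `min_support` of that segment's approvals and occurs at least `min_count` times."""
    by_segment = defaultdict(Counter)
    for segment, discount in records:
        by_segment[segment][discount] += 1
    rules = {}
    for segment, counts in by_segment.items():
        discount, count = counts.most_common(1)[0]
        support = count / sum(counts.values())
        if count >= min_count and support >= min_support:
            rules[segment] = {"default_discount_pct": discount, "support": round(support, 2)}
    return rules

print(infer_discount_rules(approvals))
# {'enterprise': {'default_discount_pct': 15, 'support': 0.75}}
```

Requiring both a minimum share and a minimum count keeps one-off exceptions (like the single 10% enterprise deal, or the three small-business deals) from being codified as rules. A reasonable safeguard is to have a data steward review inferred rules before they become shared context.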

2. Context products

Rather than attempting comprehensive documentation, context products establish the minimum viable context for a specific domain by providing:

- Relevant data assets for the domain

- Verified queries that return correct results

- Business term definitions

- Decision logic specific to that domain

Quality threshold: Context products are tested iteratively against golden questions (questions with known correct answers) until they pass an accuracy threshold, typically 80% correctness, before production deployment.
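The golden-question gate can be sketched as follows. The `ContextProduct` fields mirror the list above; the sample product, the golden questions, the stub agent, and the exact-match scoring are illustrative assumptions (real evaluations often need fuzzier answer comparison).

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class ContextProduct:
    """Hypothetical shape of a minimum viable context product for one domain."""
    domain: str
    assets: list[str]                   # relevant data assets
    verified_queries: dict[str, str]    # question -> SQL known to return correct results
    glossary: dict[str, str]            # business term -> definition
    decision_logic: list[str] = field(default_factory=list)

def passes_golden_questions(answer_fn: Callable[[str], str],
                            golden: dict[str, str],
                            threshold: float = 0.8) -> tuple[float, bool]:
    """Return (accuracy, ready), where ready means accuracy >= threshold."""
    correct = sum(1 for q, expected in golden.items() if answer_fn(q).strip() == expected)
    accuracy = correct / len(golden)
    return accuracy, accuracy >= threshold

sales = ContextProduct(
    domain="sales",
    assets=["analytics.customers", "analytics.orders"],
    verified_queries={"How many active customers do we have?":
                      "SELECT COUNT(*) FROM analytics.customers WHERE is_active"},
    glossary={"active customer": "Account with a paid order in the last 90 days"},
)

golden = {
    "How many active customers do we have?": "1200",
    "What was Q1 net revenue?": "4.2M",
    "How many open support tickets are there?": "37",
    "Which region has the most customers?": "EMEA",
    "What is the churn rate this month?": "2.1%",
}

# Stub standing in for an AI agent grounded in `sales`; it gets one answer wrong.
agent_answers = {**golden, "What is the churn rate this month?": "3.0%"}
accuracy, ready = passes_golden_questions(lambda q: agent_answers[q], golden)
print(accuracy, ready)  # 0.8 True
```

Keeping the threshold as a parameter lets each domain set its own bar; a finance context product might reasonably demand more than 80%.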

3. Feedback loops

Feedback loops enable continuous improvement through human-in-the-loop refinement.

How feedback loops work:

- User interacts with AI agent

- User clarifies or corrects AI response

- Correction feeds back into context layer

- System builds institutional memory

- Conflicts get resolved automatically

Example: When a user clarifies that “revenue” means “net revenue excluding returns,” that correction becomes permanent context.
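Here is a minimal sketch of a feedback loop that keeps every definition as institutional memory and resolves conflicts with an explicit policy. The `TermContext` class, the source labels, and the precedence order (steward-approved over user correction over glossary, then most recent) are assumptions chosen for illustration.

```python
from dataclasses import dataclass, field

# Assumed precedence when definitions conflict (higher wins).
PRIORITY = {"glossary": 0, "user_correction": 1, "steward_approved": 2}

@dataclass
class TermContext:
    """Hypothetical institutional memory for business terms."""
    history: dict[str, list[tuple[str, str]]] = field(default_factory=dict)

    def record(self, term: str, definition: str, source: str) -> None:
        # Every definition is kept, so the layer remembers how a term evolved.
        self.history.setdefault(term.lower(), []).append((source, definition))

    def resolve(self, term: str):
        entries = self.history.get(term.lower(), [])
        if not entries:
            return None
        # Highest-priority source wins; among equal priorities, the latest entry wins.
        _, (_, definition) = max(enumerate(entries),
                                 key=lambda pair: (PRIORITY[pair[1][0]], pair[0]))
        return definition

ctx = TermContext()
ctx.record("revenue", "gross revenue", "glossary")
ctx.record("Revenue", "net revenue excluding returns", "user_correction")
ctx.record("revenue", "gross revenue including tax", "glossary")  # later glossary edit
print(ctx.resolve("revenue"))  # net revenue excluding returns
```

Because the user's correction outranks a later glossary edit, the clarification from the example above persists, while the full history remains available for audits.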

4. Context stores

Context stores provide the technical foundation with multiple specialized storage systems.

Here’s what the modern context layer architecture typically includes.

| Store Type | Purpose | What It Holds |
|---|---|---|
| Graph stores | Relationships | Who reports to whom, team connections, approval flows |
| Vector stores | Unstructured knowledge | Documents, conversations, historical decisions |
| Rules engines | Business logic | Compliance rules, policies, if-then procedures |
| Time-series stores | Temporal patterns | Trends, historical patterns, audit trails |

The interface layer unifies these systems, making retrieval feel seamless whether context comes from knowledge graphs, business glossaries, or operational metadata.
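The interface layer can be pictured as one lookup that fans out to every store and labels where each fact came from. This sketch uses in-memory stand-ins for a graph store and a rules engine only; the class names and data are hypothetical, and vector or time-series stores would plug in through the same `lookup` method.

```python
from typing import Protocol

class ContextStore(Protocol):
    name: str
    def lookup(self, key: str) -> list[str]: ...

class GraphStore:
    """Toy relationship store: entity -> list of (relation, target) edges."""
    name = "graph"

    def __init__(self, edges: dict[str, list[tuple[str, str]]]):
        self.edges = edges

    def lookup(self, key: str) -> list[str]:
        return [f"{key} {rel} {target}" for rel, target in self.edges.get(key, [])]

class RulesStore:
    """Toy rules engine: entity -> applicable policy statements."""
    name = "rules"

    def __init__(self, rules: dict[str, list[str]]):
        self.rules = rules

    def lookup(self, key: str) -> list[str]:
        return list(self.rules.get(key, []))

class ContextInterface:
    """Single entry point: queries every store and tags each result with its source."""

    def __init__(self, stores: list[ContextStore]):
        self.stores = stores

    def get_context(self, key: str) -> list[tuple[str, str]]:
        return [(store.name, fact) for store in self.stores for fact in store.lookup(key)]

interface = ContextInterface([
    GraphStore({"refund_request": [("approved_by", "finance_manager")]}),
    RulesStore({"refund_request": ["Refunds above $500 need two approvals"]}),
])
context = interface.get_context("refund_request")
for source, fact in context:
    print(source, "->", fact)
```

Tagging each fact with its store keeps provenance visible to the AI system, which matters when a rule and a relationship disagree.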

Context preparation in RAG pipelines vs. traditional data preparation: What’s the difference?

As RAG became foundational to grounding large language models, where preparation happens started to matter as much as the data that’s prepared.

Traditional ML workflows prepared data before training models, but in the RAG era, context preparation happens at inference time, shaping the information that AI systems actually use for reasoning.

Key distinction:

- Traditional data preparation prepares clean, structured training datasets before a model is trained.

- Context preparation enriches and governs data at inference time, just before AI uses it to generate outputs.

This shift is part of why context engineering emerged as a solution to hallucinations and unreliable results: preparing richer, governed context reduces errors and improves trust in outcomes.

RAG pipeline anatomy

In RAG pipelines, data flows through three stages:

1. Retrieval

Rather than relying on simple keyword matching, retrieval uses semantic metadata and enriched representations to identify genuinely relevant information.

For example, a query about “customer satisfaction” finds tables even when columns are named “csat_score”, because metadata links that column to the business term.
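The `csat_score` example can be sketched with a glossary that maps business terms to the column names they cover. Exact glossary lookup here is a simplified stand-in for embedding-based semantic search, and the glossary, table names, and helpers are hypothetical.

```python
# Hypothetical business glossary: business term -> column names / abbreviations it covers
GLOSSARY = {
    "customer satisfaction": {"csat", "csat_score", "nps"},
    "revenue": {"net_revenue", "gross_revenue"},
}

# Hypothetical table catalog: table -> column names
TABLES = {
    "support.survey_results": ["ticket_id", "csat_score", "responded_at"],
    "finance.orders": ["order_id", "net_revenue", "order_date"],
}

def naive_find_tables(query):
    """Keyword baseline: match only if a column name appears verbatim in the query."""
    q = query.lower()
    return [t for t, cols in TABLES.items() if any(col in q for col in cols)]

def find_tables(query):
    """Expand business terms in the query to their mapped column names, then match."""
    q = query.lower()
    candidates = set()
    for term, columns in GLOSSARY.items():
        if term in q:
            candidates |= columns
    return [t for t, cols in TABLES.items() if candidates & set(cols)]

question = "How is customer satisfaction trending this quarter?"
print(naive_find_tables(question))  # []
print(find_tables(question))        # ['support.survey_results']
```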

2. Augmentation (where context preparation delivers most value)

Retrieval by itself does not guarantee that the chunks have the necessary meaning and scope. Context preparation enriches retrieved documents with metadata that indicates validity, temporal scope, applicability, and quality.

For instance, a document might be outdated or relevant only for certain geographies; context preparation resolves these issues before generation. Without this, models may treat every retrieval as equally authoritative, increasing hallucination risk.
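Here is a minimal sketch of the augmentation step, assuming each retrieved chunk already carries validity dates, applicable regions, and a quality score. The `Chunk` fields, the 0.7 quality cutoff, and the label format are hypothetical; the point is that expired or out-of-scope chunks are filtered out and surviving chunks carry their provenance into the prompt.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

@dataclass
class Chunk:
    text: str
    source: str
    valid_from: date
    valid_to: Optional[date] = None              # None means "still valid"
    regions: frozenset = frozenset({"GLOBAL"})   # where the content applies
    quality: float = 1.0                         # hypothetical 0-1 quality score

def prepare_context(chunks, as_of, region, min_quality=0.7):
    """Keep only chunks valid on `as_of`, applicable to `region`, and above the
    quality cutoff; prefix each survivor with its provenance metadata."""
    kept = []
    for c in chunks:
        in_time = c.valid_from <= as_of and (c.valid_to is None or as_of <= c.valid_to)
        in_scope = "GLOBAL" in c.regions or region in c.regions
        if in_time and in_scope and c.quality >= min_quality:
            regions = ",".join(sorted(c.regions))
            kept.append(f"[source={c.source}; valid_from={c.valid_from.isoformat()}; "
                        f"regions={regions}] {c.text}")
    return kept

chunks = [
    Chunk("Standard refund window is 30 days.", "policy-v2", date(2024, 1, 1), date(2024, 12, 31)),
    Chunk("Standard refund window is 45 days.", "policy-v3", date(2025, 1, 1)),
    Chunk("EU customers may cancel within 14 days.", "eu-addendum", date(2023, 6, 1),
          regions=frozenset({"EU"})),
    Chunk("Refunds are processed manually.", "old-wiki", date(2022, 1, 1), quality=0.4),
]

us_context = prepare_context(chunks, as_of=date(2025, 3, 1), region="US")
eu_context = prepare_context(chunks, as_of=date(2025, 3, 1), region="EU")
```

Without this step, a retriever could return both refund policies, and the model would have no signal that the 30-day version has expired.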

3. Generation

Once context is enriched and governed, the LLM uses it to produce responses that are both factually grounded and semantically appropriate.

Gartner’s 2024 Data and Analytics Summit found organizations with semantic context layers report 43% faster time-to-insight.

Data preparation vs. context preparation: A side-by-side comparison

| Aspect | Data Preparation (ML Era) | Context Preparation (AI Era) |
|---|---|---|
| When it happens | Before model training | At inference time |
| Primary focus | Clean, structured datasets | Semantic meaning and business logic |
| Key activities | Cleaning, standardizing, and splitting data (in RAG pipelines: chunking, embedding, storing) | Mapping terms, defining relationships, adding metadata |
| Output format | Training datasets (in RAG pipelines: vector embeddings) | Semantic layers + business glossaries + lineage graphs |
| Goal | Technical accuracy | Business understanding |

Why chunking matters in RAG systems

In RAG systems, models do not reason over entire documents. Instead, information is broken into smaller and more manageable units, or chunks, which are embedded, retrieved, and passed to the model at inference time. These chunks effectively become the unit of reasoning for the AI system.

This makes chunking a critical design choice. If chunks lose semantic meaning or strip away business context, even a well-trained model with high-quality retrieval will produce unreliable or misleading outputs.

Context engineering reframes chunking from a technical preprocessing step into a context preparation problem: how to preserve meaning, scope, and validity as information flows into AI systems.

Chunking strategies illustrate the distinction between data preparation vs. context preparation

This is where the difference between data preparation vs. context preparation becomes concrete.

Data preparation chunking (in conventional RAG pipelines)

- Optimizes for technical constraints (embedding model limits).

- Uniform chunk sizes.

- Focus on fitting data into vector databases.

- The primary goal is compatibility with infrastructure, not interpretability.

Context preparation chunking (in context-engineered RAG systems)

- Preserves semantic coherence.

- Variable chunk sizes based on meaning.

- Keeps related concepts together.

- Prevents splitting definitions from context.

- The primary goal is improving retrieval precision, reducing ambiguity and preventing misleading retrievals.
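The contrast between the two lists can be shown on a short glossary excerpt. `fixed_size_chunks` cuts uniform character windows; `semantic_chunks` is one simple meaning-aware heuristic (never split a paragraph, and pack whole paragraphs up to a size budget). Both functions and the sample text are illustrative, not a specific library's API.

```python
def fixed_size_chunks(text, size=60):
    """Data-preparation style: uniform character windows, blind to meaning."""
    return [text[i:i + size] for i in range(0, len(text), size)]

def semantic_chunks(text, max_chars=400):
    """Context-preparation style (simple heuristic): split on blank lines and pack
    whole paragraphs into chunks of at most max_chars. A paragraph longer than
    max_chars becomes its own chunk instead of being cut."""
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks, current = [], ""
    for p in paragraphs:
        if current and len(current) + len(p) + 2 > max_chars:
            chunks.append(current)
            current = p
        else:
            current = f"{current}\n\n{p}" if current else p
    if current:
        chunks.append(current)
    return chunks

active_def = ("Active customer: an account with at least one paid order in the last 90 days. "
              "Trial accounts and churned accounts are excluded.")
revenue_def = "Net revenue: gross revenue minus returns and discounts, reported in USD."
doc = active_def + "\n\n" + revenue_def

for chunk in fixed_size_chunks(doc, size=60):
    print(repr(chunk))
for chunk in semantic_chunks(doc, max_chars=100):
    print(repr(chunk))
```

With a 60-character window, the exclusion clause of the “active customer” definition lands in a different chunk from the term itself, so a retriever could surface the term without its exclusions. The paragraph-based version keeps each definition intact.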

Modern semantic layers operationalize this approach by combining chunking with business glossaries, lineage, and validity metadata. The result is context that remains intelligible and trustworthy at inference time.

This is why context preparation has become central to context engineering as organizations pushed AI systems into production.

As context engineering emerged as the dominant approach for improving AI reliability, organizations were forced to confront a deeper architectural question: where should knowledge live in an AI system? Let’s explore this in the next section.

Fine-tuning vs. context injection: The architectural decision

Organizations building AI systems face a fundamental choice: embed knowledge into model weights through fine-tuning, or inject it dynamically through context preparation. Each approach optimizes for different scenarios, and industry patterns suggest hybrid strategies often deliver optimal results.

Fine-tuning: Embedding knowledge into the model

Fine-tuning involves continued training on domain-specific datasets, adjusting model parameters to internalize specialized knowledge. This excels when tasks require consistent behavior, deep domain understanding, and predictable patterns.

Use cases such as medical diagnostics, legal document classification, or financial risk categorization benefit from fine-tuning because the model learns to reason within domain-specific frameworks rather than relying on external retrieval.

However, fine-tuning demands substantial upfront compute, becomes outdated as information changes, and lacks transparency about why models generate specific outputs.

As discussed earlier, these limitations became more visible as organizations tried to scale AI systems into production environments that demanded explainability, freshness, and governance.

Context injection: Delivering knowledge at inference time

Context injection through RAG provides an alternative. Rather than retraining models, organizations maintain external knowledge bases and retrieve relevant context at inference time. This approach offers real-time access to current information without retraining cycles.

When pricing changes or policies are updated, teams update the knowledge base rather than retrain the model. Context injection also improves explainability, since responses can be traced back to specific sources.
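Here is a minimal sketch of context injection. The retrieval step is assumed to have already returned document IDs, and the prompt template and in-memory `knowledge_base` are hypothetical. It shows two properties described above: a pricing change is a data update rather than a retraining job, and each injected passage carries a source ID the answer can cite.

```python
# Hypothetical in-memory knowledge base: document ID -> passage
knowledge_base = {
    "pricing/pro-plan": "The Pro plan costs $49 per user per month.",
    "policy/refunds": "Refunds are available within 30 days of purchase.",
}

def build_prompt(question, doc_ids):
    """Inject retrieved passages, each tagged with its source ID for traceability."""
    context = "\n".join(f"[{doc_id}] {knowledge_base[doc_id]}" for doc_id in doc_ids)
    return ("Answer using only the context below and cite source IDs in brackets.\n\n"
            f"Context:\n{context}\n\nQuestion: {question}")

# A price change is a knowledge-base update, not a retraining job.
knowledge_base["pricing/pro-plan"] = "The Pro plan costs $59 per user per month."
prompt = build_prompt("How much is the Pro plan?", ["pricing/pro-plan"])
print(prompt)
```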

The tradeoff is operational complexity. Retrieval introduces latency, and system accuracy depends heavily on the quality of context preparation, metadata, and governance. As earlier sections showed, weak context preparation is one of the main reasons RAG systems fail at scale.

RAFT (Retrieval-Augmented Fine-Tuning): The emerging pattern blending fine-tuning and context injection

In practice, many organizations now combine both approaches through retrieval-augmented fine-tuning, or RAFT. In RAFT, a model is fine-tuned on domain questions paired with retrieved documents, including irrelevant “distractor” documents, so that when it runs inside a RAG architecture it draws on the relevant context and ignores the rest.

“RAFT operates by training the model to disregard any retrieved documents that do not contribute to answering a given question, thereby eliminating distractions.” - UC Berkeley researchers, Tianjun Zhang and Shishir G. Patil, on RAFT

The RAFT paper reports that this approach outperforms both standard domain-specific fine-tuning and retrieval with a general-purpose model on several domain-specific benchmarks. A fine-tuned medical model may understand clinical terminology and reasoning patterns, while context preparation ensures the system retrieves the latest research, drug interactions, or regional guidelines.
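RAFT's core idea is in how training examples are built. The sketch below roughly follows the data recipe described in the RAFT paper: for a fraction `p_oracle` of questions, the context includes the answer-bearing (“oracle”) document plus sampled distractors; for the rest, it contains distractors only. Function and field names are hypothetical, and the paper's chain-of-thought answers that quote the oracle document are omitted for brevity.

```python
import random

def make_raft_examples(qa_items, corpus, k_distractors=3, p_oracle=0.8, seed=0):
    """Build RAFT-style training examples.

    With probability p_oracle the context holds the oracle document plus k
    distractors; otherwise it holds distractors only, which pushes the model to
    rely on learned domain knowledge when retrieval misses."""
    rng = random.Random(seed)
    examples = []
    for item in qa_items:
        oracle = corpus[item["oracle_id"]]
        distractor_pool = [doc for doc_id, doc in corpus.items() if doc_id != item["oracle_id"]]
        context = rng.sample(distractor_pool, k_distractors)
        if rng.random() < p_oracle:
            context.append(oracle)
        rng.shuffle(context)  # the oracle's position should carry no signal
        examples.append({"question": item["question"], "context": context,
                         "answer": item["answer"]})
    return examples

# Hypothetical toy corpus and question-answer pairs
corpus = {f"d{i}": f"Document {i} text" for i in range(5)}
qa_items = [
    {"question": "Question answered by d0", "oracle_id": "d0", "answer": "A0"},
    {"question": "Question answered by d1", "oracle_id": "d1", "answer": "A1"},
]
examples = make_raft_examples(qa_items, corpus, k_distractors=3, p_oracle=0.8)
```

These examples would then feed an ordinary supervised fine-tuning job; at inference time, the fine-tuned model is paired with a real retriever.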

Cost and governance drive real-world choices

Cost considerations drive practical decisions. Fine-tuning requires expensive GPU compute upfront but lower inference costs once deployed. Context injection has lower initial costs but higher ongoing expenses for retrieval infrastructure and vector database operations. Organizations managing stable, well-defined domains often choose fine-tuning. Those requiring flexibility, current information, or explainability default to context preparation with RAG.
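The cost tradeoff reduces to a break-even calculation: fine-tuning pays off once its per-query savings cover the upfront training spend. All dollar figures below are hypothetical, and the model ignores retraining when knowledge changes (which would add the upfront cost again), hosting, and engineering time. Amounts are passed as decimal strings and converted to `Fraction` so the arithmetic is exact.

```python
import math
from fractions import Fraction

def break_even_queries(finetune_upfront, finetune_per_query, rag_per_query):
    """Queries needed before fine-tuning's lower per-query cost recovers its upfront
    spend. Returns None when fine-tuning never saves money per query."""
    upfront = Fraction(finetune_upfront)
    saving = Fraction(rag_per_query) - Fraction(finetune_per_query)
    if saving <= 0:
        return None
    return math.ceil(upfront / saving)

# Hypothetical figures, in dollars, passed as strings for exact decimal arithmetic.
n = break_even_queries("20000", "0.002", "0.006")
print(f"Fine-tuning breaks even after {n:,} queries")
# Fine-tuning breaks even after 5,000,000 queries
```

With $20,000 of upfront fine-tuning and a saving of $0.004 per query, the break-even point is 5,000,000 queries; below that volume, retrieval is cheaper under these assumptions.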

Modern platforms are blurring these boundaries. Semantic layers and context graphs provide structured business logic that both fine-tuned and RAG-based systems can leverage. Rather than choosing between approaches, leading organizations build context preparation workflows that support whichever AI architecture fits each use case.

Fine-tuning vs. Context Injection vs. Hybrid (RAFT): Comparison at a glance

| Aspect | Fine-tuning | Context Injection (RAG) | Hybrid / RAFT |
|---|---|---|---|
| What is it? | Additional training for an existing LLM with smaller, task-specific data | External knowledge bases / retrieval systems | Combination of fine-tuning and retrieved context |
| When knowledge is applied | At inference via learned weights | Dynamically at inference | Both: fine-tuned patterns + real-time context |
| Best for | Stable domains, predictable tasks, deep expertise | Frequently changing information, real-time updates, explainability | Complex domains needing both domain expertise and fresh information |
| Adaptability | Low: requires retraining to update knowledge | High: knowledge base updates propagate immediately | Medium-high: model learns patterns, retrieval keeps context current |
| Explainability / traceability | Limited: outputs tied to model internals | High: responses cite sources from retrieval | High: model reasoning + source-backed context |
| Compute / cost considerations | High upfront compute, lower inference cost | Lower initial compute, higher ongoing retrieval cost | Balanced: upfront fine-tuning + ongoing retrieval overhead |
| Latency | Low: no retrieval step | Medium: retrieval adds latency | Medium: retrieval step present but model already optimized |
| Risk of stale information | High: knowledge frozen in weights | Low: retrieval provides current context | Low: retrieval ensures fresh context even for fine-tuned models |
| Role of context engineering | Supports semantic fine-tuning, prompts still needed | Central: governs retrieval, metadata, validity, chunking | Critical: orchestrates both fine-tuned knowledge and context pipelines |
| Impact on trust / hallucination | Moderate: may reduce errors in narrow domains | High: structured context reduces hallucinations | Highest: fine-tuned reasoning + enriched context minimizes errors and boosts reliability |

How modern platforms streamline context preparation for AI

Manual context documentation doesn’t scale when organizations need to prepare context for hundreds of data assets, thousands of business terms, and constantly evolving AI applications. The traditional approach of writing definitions and hoping teams keep them current fails because context changes faster than documentation processes can capture.

Modern data platforms solve this through active metadata and automated context capture. Rather than treating context as something to document, platforms like Atlan continuously monitor systems, capturing changes in real time and inferring relationships from actual usage patterns.

For instance, when a data engineer updates a Snowflake table, lineage automatically refreshes, downstream users receive notifications, and quality checks trigger without manual intervention.
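The automation in this example follows a publish/subscribe pattern: one metadata change event fans out to every registered handler. The sketch below is a toy in-memory event bus with hypothetical asset names and handlers; it is not Atlan's API, and a production system would run these actions asynchronously and persist their results.

```python
from collections import defaultdict

class MetadataEventBus:
    """Toy publish/subscribe bus: every handler subscribed to an event type
    runs, in registration order, when that event is published."""

    def __init__(self):
        self.handlers = defaultdict(list)

    def subscribe(self, event_type, handler):
        self.handlers[event_type].append(handler)

    def publish(self, event_type, payload):
        # Run each handler and collect its result.
        return [handler(payload) for handler in self.handlers[event_type]]

# Hypothetical downstream dependencies of a Snowflake table.
DOWNSTREAM = {"snowflake.sales.orders": ["dbt.fct_revenue", "tableau.revenue_dashboard"]}

bus = MetadataEventBus()
bus.subscribe("table_updated", lambda e: f"lineage refreshed for {e['table']}")
bus.subscribe("table_updated",
              lambda e: [f"notify owner of {d}" for d in DOWNSTREAM.get(e["table"], [])])
bus.subscribe("table_updated", lambda e: f"quality checks queued for {e['table']}")

results = bus.publish("table_updated", {"table": "snowflake.sales.orders"})
for r in results:
    print(r)
```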

The key innovation is treating context as shared infrastructure rather than application-specific configuration. Enterprise context layers extend this principle across the organization. Instead of each BI tool, AI agent, or analytics platform maintaining separate definitions, a unified context layer becomes the single source of truth.

The architectural advantage comes from bidirectional context flow. Enriched metadata created in Atlan flows back into source systems like Snowflake and Databricks, ensuring context stays synchronized everywhere teams work. Column-level lineage traces exactly which inputs power each metric, enabling AI agents to assess data quality and understand dependencies before using information.
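Column-level lineage is a directed graph, so “which inputs power this metric” is a graph traversal. This sketch walks upstream edges breadth-first and then checks the inputs against a quality-issue map; the column names and the `QUALITY_ISSUES` data are hypothetical.

```python
from collections import deque

# Hypothetical column-level lineage: column -> columns it is derived from
LINEAGE = {
    "dashboard.revenue_kpi.net_revenue": ["mart.fct_revenue.net_revenue"],
    "mart.fct_revenue.net_revenue": ["staging.orders.amount", "staging.returns.refund_amount"],
    "staging.orders.amount": ["raw.orders.amount_cents"],
    "staging.returns.refund_amount": ["raw.returns.refund_cents"],
}

# Hypothetical results from data quality checks
QUALITY_ISSUES = {"raw.returns.refund_cents": "failed freshness check"}

def upstream_columns(column):
    """Breadth-first walk of lineage edges; returns every column that feeds `column`."""
    visited, order = set(), []
    queue = deque(LINEAGE.get(column, []))
    while queue:
        col = queue.popleft()
        if col in visited:
            continue
        visited.add(col)
        order.append(col)
        queue.extend(LINEAGE.get(col, []))
    return order

inputs = upstream_columns("dashboard.revenue_kpi.net_revenue")
issues = {col: QUALITY_ISSUES[col] for col in inputs if col in QUALITY_ISSUES}
print(issues)  # {'raw.returns.refund_cents': 'failed freshness check'}
```

An agent could use a check like this to warn that a revenue figure depends on a source that failed its freshness check before presenting the number.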

Real stories from real customers: Building context layers for AI

Workday co-builds the semantic foundation for AI with Atlan

“All of the work that we did to get to a shared language at Workday can be leveraged by AI via Atlan’s MCP server…as part of Atlan’s AI Labs, we’re co-building the semantic layer that AI needs with new constructs, like context products.” - Joe DosSantos, VP of Enterprise Data and Analytics, Workday

Mastercard delivers “context by design” at scale

“Data is key to better customer experiences in the AI era, and Atlan helps us deliver that value. Its open, extensible foundation lets us build apps and govern data from day one.” - Andrew Reiskind, Chief Data Officer, Mastercard

Moving forward with context preparation for your enterprise data and AI ecosystem

The foundation for successful AI isn’t better models or cleaner data, but richer context. Context preparation transforms AI from brittle experiments into production-ready systems that reason accurately about business problems.

The shift from data preparation to context preparation reflects AI’s fundamental need: not just information, but understanding.

Unlike traditional data preparation, which focuses on cleaning and structuring datasets before training, context preparation enriches data at inference time. It adds semantic meaning, business rules, lineage, temporal validity, and quality signals. This ensures that AI models understand not just what the data is, but what it means in the context of the organization.

Implementing context preparation at scale requires active metadata management, context enrichment workflows, unified context layers and feedback loops. Active metadata continuously learns organizational patterns, feedback loops refine business logic, and unified context layers ensure AI agents operate from the same source of truth as human analysts.

Modern platforms like Atlan embed these practices into pipelines, so that AI outputs are more accurate, explainable, and aligned with business objectives.

Atlan’s context layer transforms data into AI-ready understanding.

FAQs about context preparation

1. What is the difference between data preparation and context preparation?

Data preparation focuses on cleaning and structuring raw data for machine learning models. Context preparation enriches data with semantic meaning, business logic, and organizational understanding so AI systems can interpret what data represents. Data prep makes information usable; context prep makes it understandable.

2. Why is context preparation important for LLMs and generative AI?

Large language models understand language patterns but lack business knowledge. Without context preparation, LLMs cannot distinguish between different definitions of “customer” or “revenue” in your organization. Context preparation provides the semantic layer that grounds AI reasoning in your specific business reality, dramatically reducing hallucinations.

3. What is context engineering?

Context engineering is the discipline of architecting effective information environments for AI systems. It encompasses designing system prompts, managing conversation history, structuring knowledge retrieval, and maintaining business logic that guides AI behavior. The term emerged in 2025 as the evolution of prompt engineering.

4. How does context preparation improve RAG performance?

Context preparation enhances RAG by enriching retrieved documents with validity scopes, temporal bounds, and quality indicators. It ensures retrieval finds semantically relevant information beyond keyword matching, and that augmented context includes the metadata AI needs to assess reliability. Some organizations report a 50% reduction in hallucinations with properly prepared context.

5. Do I need context preparation if I’m already doing data preparation?

Yes. Data preparation creates clean datasets for model training, but generative AI also needs understanding at inference time. Even with perfectly prepared training data, LLMs require context to interpret your specific business terminology, apply your organizational rules, and understand relationships between concepts. Context preparation complements rather than replaces data preparation.

6. What tools support context preparation workflows?

Modern data platforms like Atlan provide context preparation through active metadata management, semantic layers, and business glossaries. These platforms continuously capture context from source systems, enable collaborative enrichment, and deliver context to AI agents through standardized interfaces. Context preparation tools integrate with RAG frameworks, vector databases, and enterprise knowledge management systems.

Atlan is the next-generation platform for data and AI governance. It is a control plane that stitches together a business's disparate data infrastructure, cataloging and enriching data with business context and security.
